Technology is advancing at an unbelievable pace, changing the way we live, work and interact with each other. But have you ever wondered why gadgets become smaller, faster and cheaper every year? The answer lies in Moore’s Law – a famous observation made by Gordon Moore that has governed the technology industry for over 50 years. In this blog post, we’ll explore what Moore’s Law is all about, its impact on technology and what the future holds for this groundbreaking principle. So grab your coffee and join us as we delve into the fascinating world of Moore’s Law!

What is Moore’s Law?

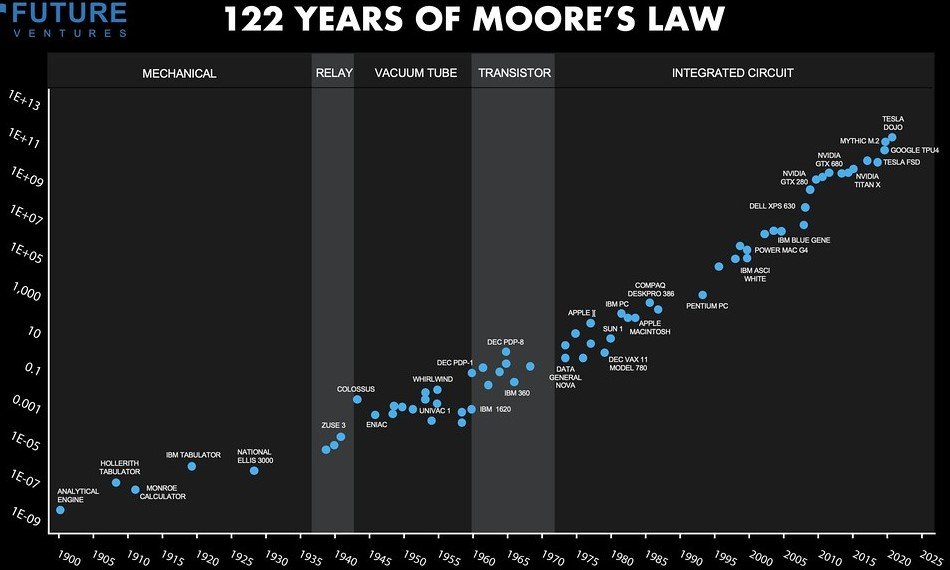

Moore’s Law is the observation that the number of transistors on a microchip doubles roughly every two years. Gordon Moore first described the trend in 1965, when he noted that the number of components per integrated circuit had doubled every year since the integrated circuit’s invention in 1958; the two-year figure comes from his later revision of that pace.
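To make the doubling concrete, here is a minimal Python sketch of the arithmetic. It assumes an idealized, perfectly steady doubling period, which real chips only approximate. The function name `projected_transistors` and the starting count of 1,000 transistors are made up for illustration and do not describe any real chip.

```python
def projected_transistors(
    initial_count: float,
    years_elapsed: float,
    doubling_period_years: float = 2.0,
) -> float:
    """Project a transistor count, assuming a fixed doubling period.

    Idealized model: count = initial_count * 2 ** (years / doubling_period).
    """
    return initial_count * 2 ** (years_elapsed / doubling_period_years)


# Hypothetical starting point: a chip with 1,000 transistors.
start = 1_000

for years in (0, 2, 10, 20):
    count = projected_transistors(start, years)
    print(f"after {years:>2} years: {count:,.0f} transistors")
```

With a two-year doubling period, ten years means five doublings, so the hypothetical chip grows 32-fold. Passing `doubling_period_years=1.0` instead models the one-year pace Moore first observed, which gives ten doublings (a 1,024-fold increase) over the same decade.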

The law implies exponential growth in computing power: as more transistors fit on a chip, electronics become smaller, faster and cheaper. This is what lets smartphones, laptops and smartwatches run many tasks at once while drawing far less power than earlier devices.

While some critics argue that Moore’s Law may eventually come to an end due to physical limitations or rising production costs, it remains one of the most influential guiding principles for technology companies worldwide.

The History of Moore’s Law

In the mid-1960s, Gordon Moore, then director of research and development at Fairchild Semiconductor, noticed a trend in the development of computer chips. He observed that roughly every year, the number of transistors on a chip doubled while the cost per transistor fell.

Moore’s observation became known as “Moore’s Law”, and it quickly gained attention within the tech industry. In his 1965 paper, Moore projected that the trend would continue for at least ten more years; in 1975, he revised the doubling period to roughly two years.

Over time, Moore’s Law proved to be remarkably accurate. As technology advanced and manufacturing processes improved, chipmakers were able to fit an increasing number of transistors onto each chip while reducing the size and cost of each transistor.

As a result of this exponential growth in computing power, we’ve seen rapid advancements in fields such as artificial intelligence (AI), virtual reality (VR), and big data analytics.